Introduction

In today’s digital landscape, where users expect lightning-fast and seamless web experiences, performance optimization is critical. Lazy loading has been a cornerstone technique in web development, enabling faster page loads by deferring the initialization of non-critical resources—such as images, videos, or JavaScript modules—until they are needed. This approach reduces initial page weight, conserves bandwidth, and enhances user satisfaction, particularly on resource-constrained devices or networks.

However, as user expectations and technological capabilities evolve, lazy loading is undergoing a transformation. The integration of Artificial Intelligence (AI), Edge Computing, and emerging technologies like WebAssembly is redefining lazy loading, making it smarter, more adaptive, and user-centric. This comprehensive guide analyzes the evolution of lazy loading, provides practical code implementations with conceptual outputs, and explores future trends that promise to shape its next frontier.

Section 1: Traditional Lazy Loading

Lazy loading, at its core, is about efficiency. By delaying the loading of non-essential resources until they are needed—typically when they enter the user’s viewport—developers can significantly reduce initial page load times. This is particularly effective for content-heavy websites, such as e-commerce platforms or media galleries.

Code Implementation: Traditional Lazy Loading

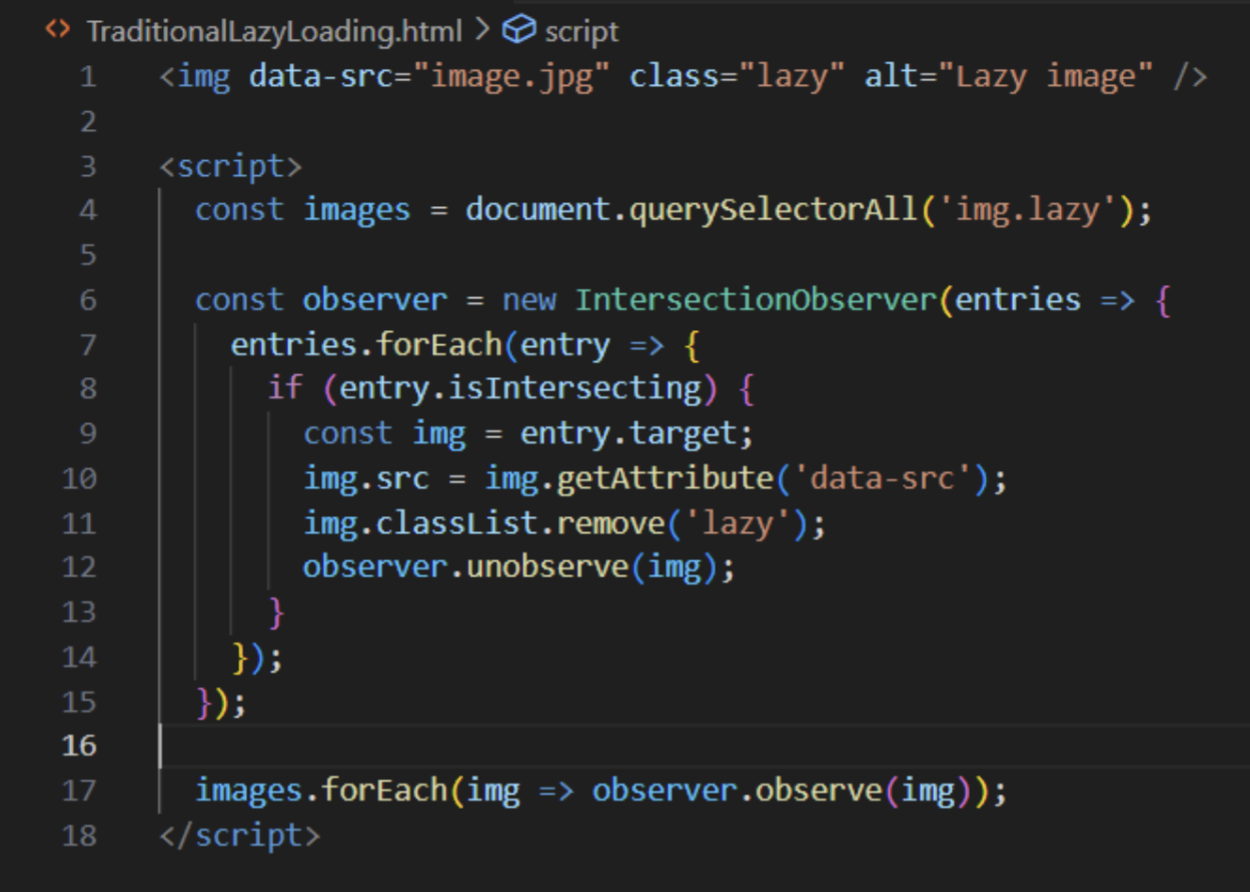

Here’s a classic example of lazy loading images using the IntersectionObserver API:

How It Works

- The data-src attribute stores the actual image URL, while a lightweight placeholder (or no src) is used initially.

- The IntersectionObserver detects when the image enters the viewport, triggering the load of the actual image.

- Once the real image URL has been assigned, the image is removed from observation so it is not processed again.
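A minimal sketch of the pattern described above, assuming markup such as <img class="lazy" data-src="photo.jpg" alt="">. The lazy class name, the 200px rootMargin, and the load-everything fallback are illustrative choices, not details taken from the original example. The observer constructor is a parameter only so the logic can be exercised outside a browser.

```javascript
// Sketch: lazy-load <img> elements whose real URL lives in data-src.
// Assumption: images are marked up as <img class="lazy" data-src="..." alt="...">.

// Called by the observer whenever observed images cross the visibility threshold.
function onIntersect(entries, observer) {
  for (const entry of entries) {
    if (!entry.isIntersecting) continue; // still off-screen: keep the placeholder
    const img = entry.target;
    img.src = img.dataset.src;           // start downloading the real image
    img.removeAttribute("data-src");
    observer.unobserve(img);             // each image only needs to be triggered once
  }
}

// ObserverCtor defaults to the browser's IntersectionObserver; it is a parameter
// only so the logic can be tested with a stand-in outside the browser.
function lazyLoadImages(images, ObserverCtor = globalThis.IntersectionObserver) {
  if (typeof ObserverCtor !== "function") {
    // No IntersectionObserver available: fall back to loading everything immediately.
    for (const img of images) img.src = img.dataset.src;
    return null;
  }
  const observer = new ObserverCtor(onIntersect, {
    rootMargin: "200px 0px", // begin loading slightly before the image enters the viewport (illustrative)
    threshold: 0,
  });
  for (const img of images) observer.observe(img);
  return observer;
}

// Browser usage:
// lazyLoadImages(document.querySelectorAll("img.lazy"));
```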

Output (Conceptual)

When the page loads, only placeholders (e.g., low-resolution images or empty spaces) are visible. As the user scrolls, images load seamlessly as they enter the viewport, reducing initial load time and improving perceived performance.

Limitations

Traditional lazy loading relies on static rules, such as viewport visibility, which do not account for user behavior, device capabilities, or network conditions. This limitation has spurred the development of more intelligent approaches.

Section 2: AI-Enhanced Lazy Loading

AI is revolutionizing lazy loading by introducing predictive and context-aware capabilities. By analyzing user behavior, preferences, and contextual data, AI can preload resources that are likely to be needed, prioritize content based on relevance, and adapt to varying conditions like network speed or device type.

Key Benefits

- Predictive Preloading: AI predicts user actions, such as scrolling to a specific section, and preloads relevant content.

- Intelligent Prioritization: Content is loaded based on engagement metrics or user intent, ensuring the most relevant resources are available first.

- Adaptive Loading: AI adjusts loading strategies based on real-time factors like bandwidth or battery level.
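The Predictive Preloading idea can be illustrated with a deliberately simple stand-in for a model: extrapolate the current scroll velocity over a short horizon and list the not-yet-loaded sections the viewport is likely to reach. This is linear extrapolation, not AI; a production system might swap in a learned model that makes the same kind of prediction. The function name, the section shape ({ id, top, height, loaded }), and the 500 ms default horizon are hypothetical.

```javascript
// Hypothetical stand-in for a predictive model: linear extrapolation of scrolling.
// sections: [{ id, top, height, loaded }] with top/height in CSS pixels.
function predictSectionsToPreload(sections, scrollY, velocityPxPerMs, viewportHeight, horizonMs = 500) {
  // Where the top of the viewport is expected to be after horizonMs.
  const predictedY = scrollY + velocityPxPerMs * horizonMs;
  // Vertical band the viewport is expected to sweep through (scrolling up or down).
  const bandStart = Math.min(scrollY, predictedY);
  const bandEnd = Math.max(scrollY, predictedY) + viewportHeight;
  return sections
    .filter((s) => !s.loaded && s.top < bandEnd && s.top + s.height > bandStart)
    .map((s) => s.id);
}

// Browser usage idea: sample window.scrollY over time to estimate velocity, call
// predictSectionsToPreload on scroll, and start fetching the returned section ids.
```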

Code Implementation: AI-Enhanced Lazy Loading

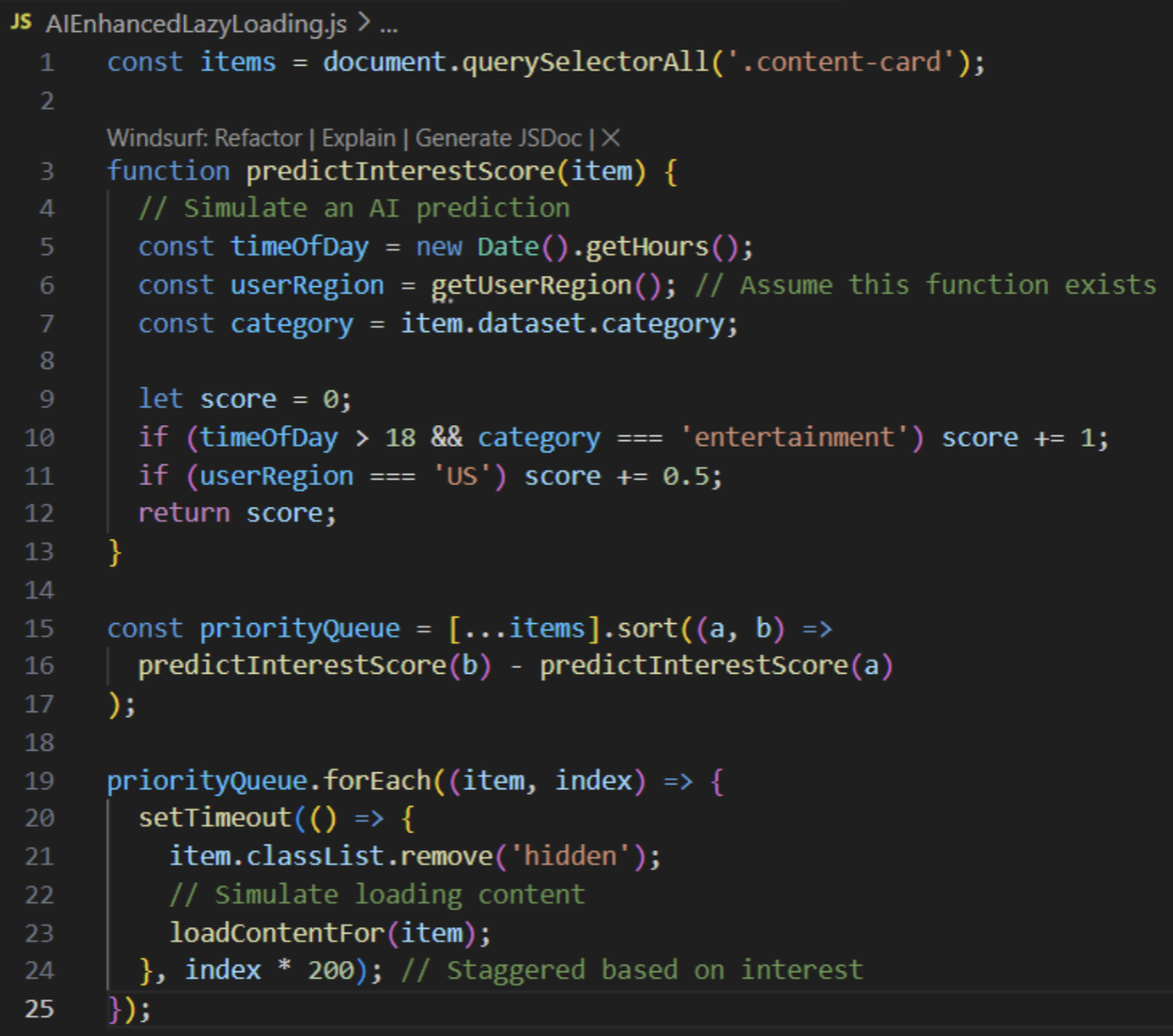

Below is a simplified example simulating AI-driven lazy loading by prioritizing content based on a predicted interest score:

How It Works

- Each content card (e.g., a product or article) is assigned an interest score based on factors like time of day and user region.

- Cards are sorted by score, and higher-priority content is loaded first, with each subsequent card starting after a short delay to stagger resource demands.

- In a real-world scenario, predictInterestScore would leverage a machine learning model trained on user interaction data.
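A minimal sketch of the flow described above. predictInterestScore is the name used in the text, but its body here is a hand-written heuristic, not a trained model; planLoadOrder, scheduleLoads, the card fields (id, tags, baseScore), and the 100 ms stagger are hypothetical.

```javascript
// Hypothetical heuristic standing in for a trained model.
// card: { id, tags: string[], baseScore?: number }; context: { region, hour }
function predictInterestScore(card, context) {
  let score = card.baseScore ?? 0;
  if (card.tags.includes(context.region)) score += 2;            // regional relevance
  const evening = context.hour >= 18 || context.hour < 6;
  if (evening && card.tags.includes("entertainment")) score += 1; // time-of-day bias
  return score;
}

// Sort cards by predicted interest and give each a staggered start delay.
function planLoadOrder(cards, context, staggerMs = 100) {
  return cards
    .map((card) => ({ card, score: predictInterestScore(card, context) }))
    .sort((a, b) => b.score - a.score) // highest score first; ties keep their original order
    .map(({ card, score }, i) => ({ id: card.id, score, delayMs: i * staggerMs }));
}

// Start loading according to the plan; loadCard is supplied by the page.
// Returns the timer handles so pending loads can be cancelled with clearTimeout.
function scheduleLoads(plan, loadCard) {
  return plan.map(({ id, delayMs }) => setTimeout(() => loadCard(id), delayMs));
}
```

Keeping the ordering in a pure function means a real model could later replace predictInterestScore without touching the scheduling code.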

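Output (Conceptual)

The cards with the highest predicted interest render first, and the remaining cards fill in one after another at short intervals instead of competing for bandwidth all at once.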

Real-World Example

Companies like Netflix use AI-driven lazy loading to preload preview thumbnails or video player modules only when users hover or click, minimizing initial page load and tailoring content delivery based on user interaction.

Section 3: Edge Computing and Lazy Loading

Edge computing brings data processing and storage closer to the user through geographically distributed servers, significantly reducing latency. When combined with lazy loading, edge computing enables faster content delivery and smarter loading decisions based on user location, device type, and network conditions.

Key Benefits

- Ultra-Fast Load Times: Content is served from nearby edge servers, reducing latency.

- Context-Aware Decisions: Edge servers can tailor content delivery based on real-time data, such as serving compressed images for slow connections.

- Scalability and Resilience: Edge-enabled lazy loading ensures efficient content delivery even under high traffic or network strain.
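As a sketch of the context-aware decision in the second bullet, the function below picks an image quality tier from two request hints an edge function can read: the standard Save-Data header and the ECT (effective connection type) client hint. ECT is only sent by Chromium-based browsers after the server opts in with an Accept-CH response header, so when neither hint is present the sketch assumes a normal connection. The tier names and the /images/<tier>/ path layout are hypothetical.

```javascript
// Choose an image quality tier from request hints available at the edge.
// headers: anything with a get(name) method, such as a Fetch API Headers object.
function imageQualityFromHeaders(headers) {
  const saveData = (headers.get("save-data") ?? "").toLowerCase() === "on";
  const ect = headers.get("ect") ?? ""; // "slow-2g" | "2g" | "3g" | "4g" when present
  if (saveData || ect === "slow-2g" || ect === "2g") return "compressed";
  if (ect === "3g") return "standard";
  return "high"; // 4g, or no hint at all
}

// Hypothetical storage layout: /images/cat.jpg -> /images/compressed/cat.jpg
function rewriteImagePath(pathname, quality) {
  if (quality === "high") return pathname;
  return pathname.replace(/^\/images\//, `/images/${quality}/`);
}
```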

Code Implementation: Edge-Aware Lazy Loading

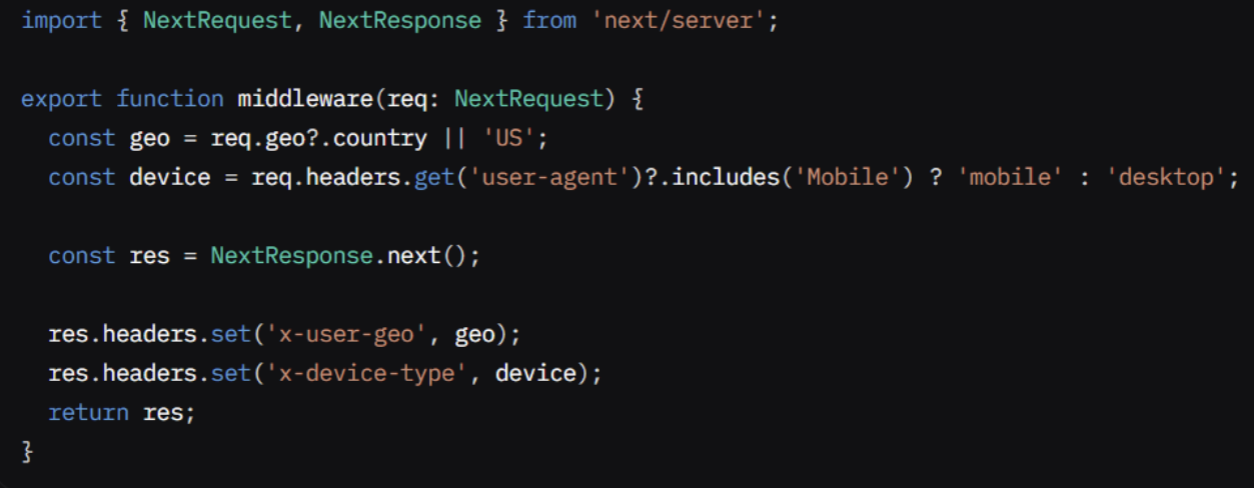

Using Next.js with Vercel’s Edge Middleware for context-aware lazy loading:

How It Works

- The middleware sets headers for user location and device type.

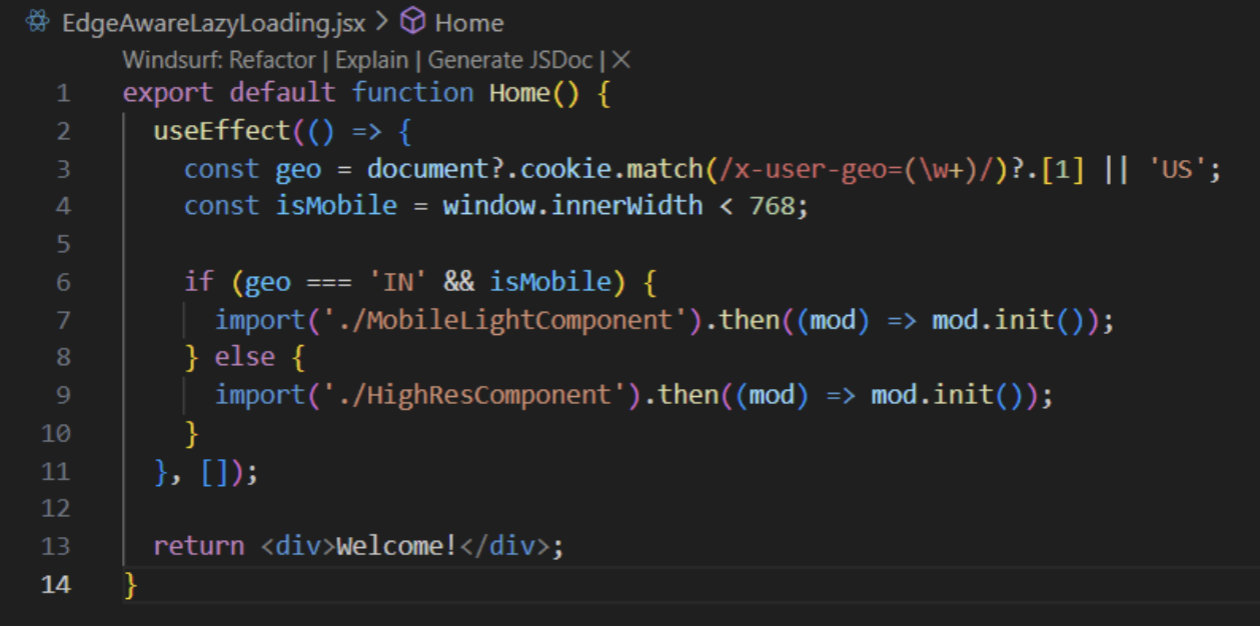

- The React component dynamically imports either a lightweight or high-resolution component based on these headers.

- This ensures optimized content delivery tailored to the user’s context.
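The steps above can be sketched by keeping the decision logic in plain functions and showing the Next.js wiring as comments. This assumes deployment on Vercel, which adds an x-vercel-ip-country request header; the x-user-country and x-device-type header names, the LiteGallery and FullGallery Client Components, and the user-agent regex are hypothetical. In Next.js, headers set only on the middleware's response go to the browser, not to the page's server code, so the sketch forwards them on the request with NextResponse.next({ request: { headers } }).

```javascript
// Derive coarse request context from headers (works with a Fetch API Headers object).
// Assumption: Vercel populates x-vercel-ip-country; elsewhere it will be missing.
function classifyRequest(headers) {
  const country = headers.get("x-vercel-ip-country") ?? "unknown";
  const ua = headers.get("user-agent") ?? "";
  const device = /Mobi|Android|iPhone/i.test(ua) ? "mobile" : "desktop"; // rough UA sniffing
  return { country, device };
}

// Mobile requests get the lightweight component, desktops the high-resolution one.
function pickVariant({ device }) {
  return device === "mobile" ? "lite" : "full";
}

// Possible wiring (not executed here):
//
// middleware.js
// import { NextResponse } from "next/server";
// export function middleware(request) {
//   const { country, device } = classifyRequest(request.headers);
//   const headers = new Headers(request.headers);
//   headers.set("x-user-country", country);
//   headers.set("x-device-type", device);
//   return NextResponse.next({ request: { headers } }); // visible to the page's server code
// }
//
// page.js (App Router server component; galleries are hypothetical Client Components)
// import dynamic from "next/dynamic";
// import { headers } from "next/headers";
// const LiteGallery = dynamic(() => import("./LiteGallery"));
// const FullGallery = dynamic(() => import("./FullGallery"));
// export default async function Page() {
//   const device = (await headers()).get("x-device-type");
//   return pickVariant({ device }) === "lite" ? <LiteGallery /> : <FullGallery />;
// }
```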

Output (Conceptual)

- A mobile user in India loads a lightweight component, reducing data usage and improving speed.

- A desktop user in the US loads a high-resolution component, leveraging faster network capabilities.

- The result is a tailored, efficient user experience with minimal latency.

Real-World Example

Airbnb employs edge-aware lazy loading to dynamically load listing details, maps, and images only when needed, optimizing performance for users on slower mobile networks.

Section 4: WebAssembly and Lazy Loading

WebAssembly (WASM) is a binary instruction format that enables high-performance code (e.g., written in C++ or Rust) to run in the browser at near-native speed. When integrated with lazy loading, WASM allows performance-critical modules, such as image decoders or video players, to be loaded on demand, reducing initial page weight.

Potential Applications

- High-Performance Modules: Lazy loading WASM modules for tasks like image processing or 3D rendering in gaming and visualization apps.

- Efficient Resource Use: Loading only the necessary modules when triggered by user actions, such as activating a feature.

While published examples of lazy-loaded WASM modules are less common than lazy-loaded images or components, the potential for on-demand, high-performance modules is significant, particularly in resource-intensive applications.
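A minimal sketch of an on-demand WASM module: nothing is fetched or compiled until the feature is first used, and the instantiation promise is cached so repeated calls share one instance. The lazyWasm helper, the /wasm/decoder.wasm URL, and the decode export are hypothetical; WebAssembly.instantiate and fetch are standard APIs.

```javascript
// Returns a function that loads and instantiates a WASM module on first call only.
// loadBytes: () => ArrayBuffer | Uint8Array | Promise of either.
function lazyWasm(loadBytes, imports = {}) {
  let exportsPromise = null; // nothing fetched or compiled until first use
  return function getExports() {
    if (!exportsPromise) {
      exportsPromise = Promise.resolve(loadBytes())
        .then((bytes) => WebAssembly.instantiate(bytes, imports))
        .then(({ instance }) => instance.exports)
        .catch((err) => {
          exportsPromise = null; // let a later call retry after a failed download
          throw err;
        });
    }
    return exportsPromise; // every caller shares the same instance
  };
}

// Browser usage (hypothetical module and export names):
// const getDecoder = lazyWasm(() => fetch("/wasm/decoder.wasm").then((r) => r.arrayBuffer()));
// button.addEventListener("click", async () => {
//   const { decode } = await getDecoder(); // first click triggers the download
//   decode(/* ... */);
// });
```

Where the server sends the application/wasm MIME type, WebAssembly.instantiateStreaming can compile while the module downloads; the byte-based version is used here to keep the sketch environment-neutral.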

Section 5: Future Trends in Lazy Loading

The future of lazy loading is poised to be transformative, driven by advancements in AI, edge computing, and related technologies. Key trends include:

| Trend | Description |
| --- | --- |
| AI-Powered Preloading | Predicting user behavior to preload relevant content proactively. |
| Edge-Aware Lazy Loading | Dynamic content delivery from edge servers based on user context. |
| WASM Lazy Modules | Efficient, high-performance binary modules loaded on demand. |
| Personalization at Runtime | Tailoring content loading based on user preferences and behavior. |
| Lazy APIs & Microservices | Triggering backend endpoints only when needed, optimizing server resources. |

These trends indicate a shift toward intelligent, adaptive, and distributed loading strategies that prioritize user experience.

Challenges and Considerations

- Privacy: AI-driven personalization must balance performance with user data protection, ensuring compliance with regulations like GDPR.

- Debugging Complexity: Dynamic loading logic can be challenging to trace and test, necessitating advanced tooling.

- Standardization: Evolving industry standards are needed to ensure compatibility across platforms and frameworks.

Conclusion

Lazy loading has evolved from a simple optimization technique to a sophisticated, intelligent strategy powered by AI and edge computing. By predicting user needs, prioritizing content, and leveraging nearby servers, developers can create web experiences that are fast, personalized, and resource-efficient. The integration of WebAssembly and other emerging technologies further enhances the potential for high-performance, on-demand loading. As developers embrace these advancements, lazy loading will continue to play a pivotal role in delivering smarter, faster, and more empathetic digital experiences.

Shobhit Srivastava is a dedicated Software Engineer at Valiance Solutions, passionate about building scalable, secure, and innovative cloud-native solutions. Shobhit excels in managing containerized and serverless deployments, and optimizing CI/CD pipelines. He combines deep expertise in distributed systems and AI-driven innovation to drive digital transformation. A proactive problem-solver, Shobhit thrives on continuous learning, delivering high-performance systems, and contributing to impactful solutions in dynamic, challenging environments.