Adoption Without Sovereignty Is Not Enough

In today’s public-sector environments, where huge quantities of citizen data are generated, managed, and analyzed daily, digital transformation alone cannot guarantee national security. Governments are using AI across infrastructure, national security, citizen services, finance, procurement, and public safety. Faster automation, however, does not always produce safer or more independent decision-making. Data may be plentiful, but control over the intelligence derived from it is often lacking.

Public institutions now operate in complex, high-stakes environments where national security, geopolitical risk, regulatory duty, and citizen trust are all linked. The expectation is clear: AI systems must be safe, transparent, legally compliant, and fully controlled within national borders.

But in practice, this promise is often unfulfilled.

Although AI use is increasing across government agencies, regulators, and public-sector utilities, most public-sector AI projects stall at the pilot stage. The systems show technical promise but fail to scale into organized, long-term deployments. The result is progress without sovereignty and innovation without long-term control.

AI development is no longer the largest barrier.

Public data becomes national power only when the intelligence derived from it remains under sovereign control.

Through Sovereign AI, governments can begin to close the gap between experimentation and long-term national capability, ensuring that public intelligence serves citizens rather than external platforms.

Limitations of Traditional Government AI Models

Traditional government uses of AI often depend on externally managed platforms, inconsistent data ecosystems, and foreign infrastructure. Organizations often assume that advanced technology guarantees progress, but scaling in the real world requires long-term operational ownership and governance discipline.

Key limitations include:

- Foreign reliance: Outside companies frequently manage the core AI infrastructure.

- Risks to data sovereignty: Sensitive citizen data may be processed or stored outside national borders.

- Fragmented data ecosystems: Public data is scattered across ministries, legacy systems, and incompatible formats.

- Opaque AI decisions: There is limited visibility into how results are produced.

- Diffused oversight: No single organizational owner is accountable for AI outcomes.

- Pilot-driven execution: Projects are treated as experiments rather than as long-term systems.

These systems may succeed technically, but they do not guarantee national control. Intelligence loses strategic value when governance is outsourced.

Current Realities in Government AI and Data Security

The Indian public sector has recognized AI as a strategic priority, and AI is now used in procurement, regulation, finance, infrastructure, security, and citizen services. However, only a small number of projects move from pilots to large-scale production systems.

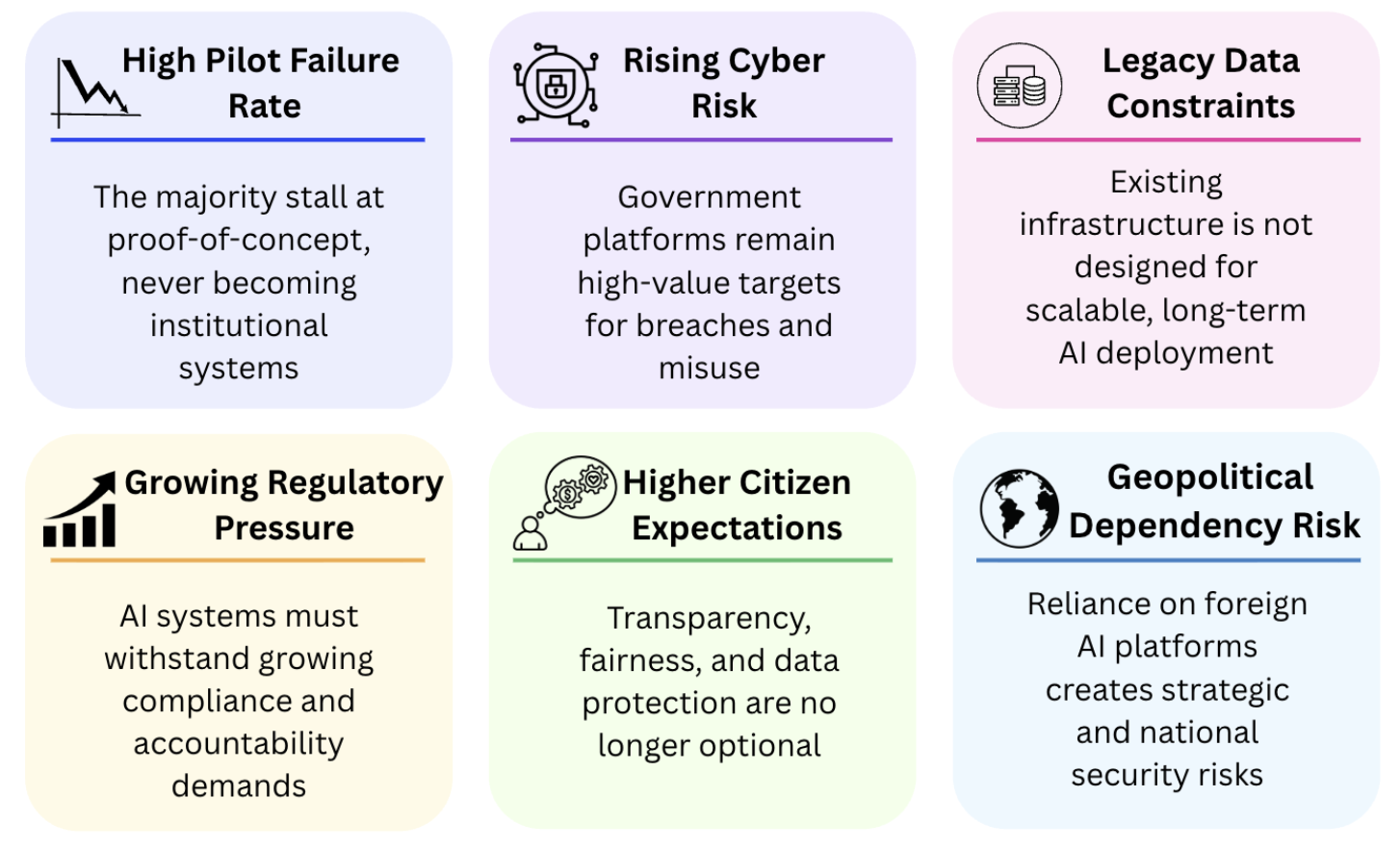

Today, public-sector AI environments are defined by:

These realities point to a widening gap: governments are producing more AI-driven insights than ever before.

Yet they lack full control over how that intelligence is governed, scaled, and sustained.

Key Challenges Without Sovereign AI in Government

Recurring issues arise when AI remains pilot-driven or subject to external oversight:

- Stalled pilots: Systems fail to move from testing to deployment.

- Absence of institutional ownership: Responsibility for outcomes remains unclear.

- Governance blind spots: Transparency and compliance issues surface too late.

- Operational disconnect: AI remains an add-on to processes rather than part of them.

- Erosion of public trust: Concerns about privacy, fairness, and misuse continue to grow.

- Strategic dependency: National intelligence depends on external ecosystems.

These challenges highlight a basic fact: success with AI in government depends more on sovereignty and governance than on technology.

How Sovereign AI Transforms Government Intelligence

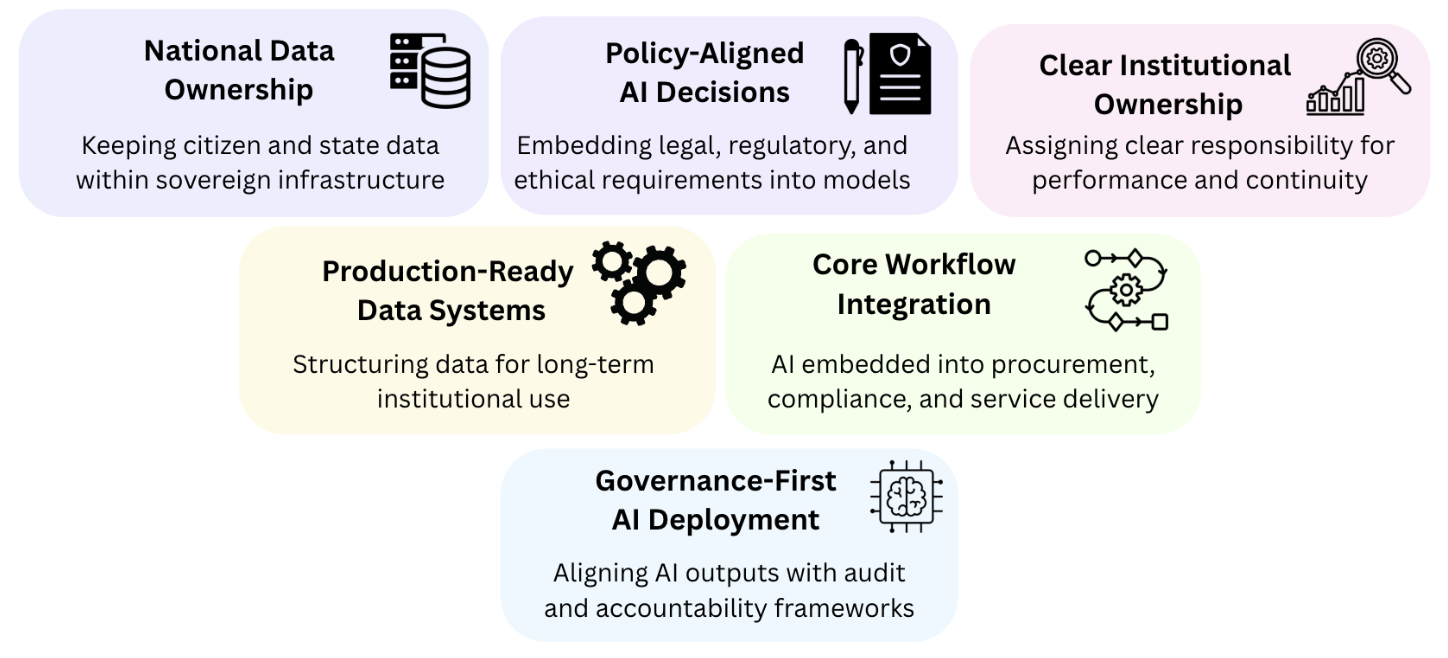

Sovereign AI replaces externally controlled intelligence with nationally owned, institutionally controlled, and policy-aligned systems. Rather than asking whether AI can function technically, Sovereign AI asks whether intelligence can operate reliably, year after year, within national governance, control, and operational structures.

Instead of asking, “Which AI platform should we adopt?”, governments pursuing Sovereign AI ask, “How do we control our data, govern intelligence, and embed AI within national systems?”

This shift makes possible:

This development matters: governments move from AI experimentation to the institutional adoption of intelligence.

Why Sovereign AI Matters More Than Traditional AI Adoption

As governments accelerate AI adoption, the real issue is sustainability at scale, not technological feasibility.

Public-sector AI does not fail because models perform poorly.

It fails because systems are not built to withstand public accountability, fragmented data, regulatory oversight, and complex governance.

Beyond automation, Sovereign AI strengthens national resilience. It delivers long-term, scalable public intelligence, safeguards citizen data, reduces reliance on foreign providers, embeds AI in core governance processes, and ensures transparency.

In the future, AI in governance will need to be more than intelligent. It must be durable, transparent, and independent.

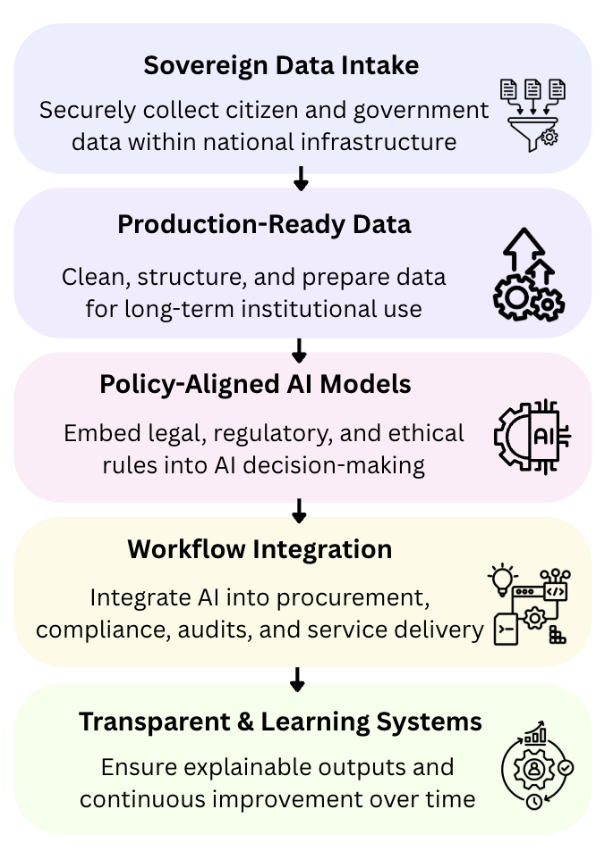

How Sovereign AI Works in Public-Sector Workflows

The lifecycle of a Sovereign AI framework is governance-first and intelligence-driven:

Sovereign AI is not merely a technology stack.

It governs. It embeds within institutions. It endures.

Looking Ahead: The Future of Sovereign AI in Governance

Future-ready governments will treat AI as a long-term institutional capability rather than a one-time technology initiative. As AI becomes embedded in public processes, the central questions will shift from how to use it to who controls intelligence, how it is governed, and who is accountable when it shapes laws and regulations.

Public institutions will increasingly need systems that can:

- Maintain sovereign authority over national intelligence and citizen data.

- Move AI from pilots to durable, production-grade institutional systems.

- Align AI outputs with governance, oversight, legal, and regulatory frameworks.

- Bring intelligence into everyday operations and core government activities.

- Reduce dependence on external AI ecosystems and foreign technology.

- Build local AI capability by strengthening national talent, institutions, and ecosystems.

- Maintain public trust through transparency, accountability, and oversight.

Sovereign AI will not be defined by whoever has the largest datasets or the most powerful compute. It will be defined by who produces intelligence aligned with the logic of governance, the national context, and the public interest, and who retains the ability to intervene, challenge outcomes, and change course.

AI will remain central to how governments operate.

Sovereign intelligence, not delegated decision-making, will ultimately determine national resilience.

At Valiance Solutions, we believe that Sovereign AI is an organizational and regulatory goal rather than a technology challenge. Beyond innovation, sustainable public-sector AI requires governance, institutional commitment, and long-term operational control.

Built on real-world experience across private companies, academic institutions, and government organizations, our Sovereign AI capabilities enable:

- Production-grade AI systems that scale beyond pilots

- Sovereign data systems that meet national security requirements

- Governance-focused AI systems built for control, security, and transparency

- Direct integration of AI into public-sector operations and workflows

- Institutional ownership models that ensure impact, accountability, and continuity

Governments do not need more experimental AI.

They need intelligence that is accountable, durable, sovereign, and built in the national interest.