In the fast-paced world of technology, you may have heard the buzz around Kubernetes. But what exactly is it, and why are major tech players making the shift towards it? If these questions have sparked your curiosity, you’re in the right place. This blog aims to unravel the mystery behind Kubernetes in plain and simple language, making it accessible to anyone, regardless of their technical background.

Think of Kubernetes as a conductor directing a symphony of apps and services across the digital landscape. Giants like Google, Microsoft, and Amazon have embraced this powerful tool, but why? What makes Kubernetes so exciting to the tech community?

We’ll begin our adventure with the “why”: what advantages of Kubernetes are these IT giants embracing? Once we understand the why, we’ll get to the “what”: in plain English, what is Kubernetes, without getting bogged down in technical details? Lastly, we’ll conclude with the “how”: how can you deploy an application with Kubernetes on a real cluster, demystifying the process one step at a time?

Join us as we explore the world of Kubernetes, whether you’re a tech enthusiast, an inquisitive learner, or someone simply trying to understand the major changes occurring in the digital world. By the time you finish, you’ll understand not only why the biggest IT companies are moving to Kubernetes, but also how it works and how you can use it in your own virtual playground.

Let’s dive in!

The Why Way

Two architectural philosophies have gained influence in the rapidly changing field of technology: the conventional monolithic model and the contemporary microservices design. Large software companies have been moving towards microservices lately, and Kubernetes, a potent orchestration technology, is at the center of this movement.

What are Microservices & Monolithic Models?

Microservices: A Symphony of Independence

With a microservices architecture, a large, complicated program is divided into smaller, autonomous services. Every service runs independently and communicates with other services via well-defined APIs. This makes the system easier to maintain, scale, and adapt.

Monolithic: The Unified Behemoth

Conversely, a monolithic architecture consists of a single, tightly coupled structure in which all parts are connected and deployed together. Although these applications are easier to build and deploy at first, they become harder to scale and change as they grow.

Why the Shift to Microservices?

- Agility and Flexibility: Microservices allow quick changes and feature additions without affecting the entire system.

- Scalability: Only the services that need more capacity have to be scaled, so resources are used more efficiently than when an entire application is scaled as one unit.

- Fault Isolation: In microservices, if one service fails, it doesn’t bring down the entire application. This ensures higher reliability.

How Are Microservices Related to Kubernetes?

Kubernetes is like a traffic controller for microservices. It keeps containerized apps running smoothly across a cluster of servers by orchestrating their deployment, scaling, and management.

Handling Microservices with Kubernetes

- Automation: By automating repetitive processes, Kubernetes facilitates the management and scalability of microservices.

- Load balancing: It distributes incoming traffic across the running instances (replicas) of a microservice, so that no single instance becomes overloaded.

- Self-Healing: Kubernetes detects failed containers and Pods and restarts or replaces them, which helps keep services available.

Kubernetes as the Standard

- Portability: Applications become more portable when using Kubernetes, since it offers a uniform environment across many infrastructures, from on-premises servers to public clouds.

- Community Support: A sizable and vibrant community provides frequent updates, assistance, and abundant user-generated content.

- Cost-Efficiency: Kubernetes can improve resource utilization by packing workloads onto shared nodes and scaling them with demand, which can lower infrastructure costs.

Real-Life Example:

Kubernetes has emerged as the de facto standard for deploying and managing containerized applications, and many organizations have adopted it. Netflix is often cited as a company that relies on container orchestration at very large scale to support its streaming service. Here’s a quick illustration of how a streaming platform like this can benefit:

Use Case: Scalability and Content Delivery

A streaming service needs to scale its infrastructure dynamically in response to demand. During periods of high viewership, Kubernetes can automatically scale up the necessary microservices and containers to handle the increased load, keeping streaming smooth for customers at peak times. When demand drops, it scales the system back down, which improves resource utilization and reduces cost.

Essentially, Kubernetes automates the deployment, scaling, and maintenance of containerized apps, helping a streaming giant like Netflix continue to provide a responsive and dependable service.
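
The scale-up and scale-down behavior described above is typically expressed in Kubernetes with a HorizontalPodAutoscaler. The following is a minimal sketch, not Netflix’s actual configuration: the Deployment name streaming-api, the replica bounds, and the 70% CPU target are all hypothetical values chosen for illustration.

```yaml
# hpa.yaml: illustrative autoscaling policy (all names and numbers are assumptions)
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: streaming-api-hpa
spec:
  # The workload to scale; assumes a Deployment with this name already exists
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: streaming-api
  minReplicas: 3        # floor kept during quiet periods
  maxReplicas: 50       # ceiling reached during peak viewership
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # add replicas when average CPU exceeds 70% of requests
```

A policy like this assumes that a metrics source such as metrics-server is installed in the cluster and that the target Pods declare CPU requests, since utilization is calculated relative to those requests.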

Solving Real Problems

Kubernetes solves the problem of scaling and managing containerized apps effectively. Because it automates deployment, scaling, and day-to-day operations, it has become a vital tool for tech companies that need their applications to run reliably in today’s rapidly evolving digital ecosystem.

In conclusion, the adoption of Kubernetes by tech heavyweights and the move towards microservices highlight a shared endeavor to embrace scalability, agility, and reliability. The partnership between Kubernetes and microservices will probably continue to be a major force behind efficiency and innovation in the digital space as technology develops.

The What Universe

Consider yourself the captain of a ship navigating the enormous and ever-changing sea of digital applications. Let me introduce you to Kubernetes, your trustworthy first mate who will help steer your ship through the constantly shifting currents of the virtual ocean. But first, what is Kubernetes, and how can it make the complicated world of application management easier to understand?

Before we get too technical, let’s keep things basic. Think of Kubernetes as a digital traffic controller, making sure every application has the resources it needs to run smoothly without creating bottlenecks. Its job is to keep order in the fast-paced world of digital operations.

In this segment, we will dissect Kubernetes, investigating its essential elements and comprehending how it functions to streamline the deployment and administration of applications. So grab a seat, and join us as we explore the fundamentals of Kubernetes, your reliable first mate in the vast world of digital possibilities.

Understanding the Basics

What is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Imagine it as the conductor of a containerized orchestra, ensuring each component plays its part harmoniously. Understanding Kubernetes’ role is the first step in grasping its significance.

Kubernetes Architecture

Several essential parts of Kubernetes work together to support your applications. The principal architectural components are:

- Master Node (Control Plane): This is your Kubernetes cluster’s central command center, where global cluster decisions (such as scheduling and scaling) are made.

- Worker Nodes: Your containers operate on these computers. They ensure that your applications run as planned by following instructions from the Master Node.

- Kubelet: The Kubelet is the agent that runs on every worker node. It communicates with the control plane and makes sure the containers described in each Pod assigned to its node are running and healthy.

- etcd: All of the cluster’s configuration information is kept in this distributed key-value store.

- API Server: The API Server is the front end of the Kubernetes control plane. It receives REST requests, validates them, and updates the state of the cluster’s objects accordingly.

- Controller Manager: This component runs the controllers that continuously compare the desired state of your applications with their current state and make the changes needed to bring the two in line.

- Scheduler: Based on resource availability and requirements, the Scheduler determines which Worker Node your Pod—the smallest deployable unit in Kubernetes—should execute on.

Together, these elements guarantee the smooth operation and constant availability of your apps. It is imperative to comprehend this design to manage a Kubernetes cluster efficiently.

Kubernetes Resources

Creating Your First Pod: The idea of a “Pod” lies at the foundation of Kubernetes. A Pod is the smallest deployable unit in Kubernetes. It holds one or more containers that share the same network and storage resources.
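
As a rough sketch of what a Pod looks like in practice, here is a minimal manifest. The name hello-pod, the app label, and the nginx image are placeholders chosen for illustration and are not part of the demo application built later in this post.

```yaml
# pod.yaml: a minimal single-container Pod (names and image are illustrative)
apiVersion: v1
kind: Pod
metadata:
  name: hello-pod
  labels:
    app: hello            # labels let other resources, such as Services, select this Pod
spec:
  containers:
    - name: hello
      image: nginx:1.25   # any container image could be used here
      ports:
        - containerPort: 80
      resources:
        requests:         # the Scheduler uses requests to pick a node with enough capacity
          cpu: 100m
          memory: 64Mi
```

A manifest like this would be submitted with kubectl apply -f pod.yaml. In practice, Pods are rarely created directly; they are usually managed by a higher-level resource such as a Deployment.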

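Replicating Pods with Replication Controllers: After a Pod has been deployed, you need to make sure your application stays dependable and accessible. Replication Controllers are useful in this situation: they keep a specified number of identical Pod replicas running and replace any that fail. In current Kubernetes versions this role is usually filled by ReplicaSets, which are in turn managed by Deployments.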

Deploying with Deployments: Replication Controllers guarantee availability, but Deployments manage your applications in a more sophisticated way. By offering declarative updates to apps, they make it easier to scale an application, roll out new software versions gradually, and roll back if something goes wrong.
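
To make the idea of declarative updates more concrete, here is a hedged sketch of a Deployment that keeps three replicas running and replaces them gradually during an update. The names, the nginx image, and the rollout settings are illustrative assumptions.

```yaml
# A minimal Deployment sketch (names, image, and rollout settings are illustrative)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-deployment
spec:
  replicas: 3                 # desired number of identical Pods
  selector:
    matchLabels:
      app: hello              # must match the labels in the Pod template below
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1       # at most one Pod may be down during an update
      maxSurge: 1             # at most one extra Pod may be created during an update
  template:
    metadata:
      labels:
        app: hello
    spec:
      containers:
        - name: hello
          image: nginx:1.25   # changing this tag and re-applying triggers a rolling update
          ports:
            - containerPort: 80
```

With a file like this, releasing a new version amounts to editing the image tag and running kubectl apply again; Kubernetes then works out the steps needed to reach the new desired state.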

Now that the foundation for understanding Kubernetes has been established, you may be asking yourself, “How does this all fit together, and how can I use this digital first mate for my applications?”

The How About Kubernetes

Bringing Kubernetes to Life – Now that we’ve uncovered the ‘why’ and demystified the ‘what’ of Kubernetes, it’s time for the exciting part – the ‘how’! Imagine you have the tools to orchestrate and manage your digital world seamlessly. Well, with Kubernetes, that dream becomes a reality.

It’s time to roll up your sleeves and unleash the power of Kubernetes in a live cluster. So, let’s dive into the ‘how’ of Kubernetes with enthusiasm and a sense of empowerment. By the end of this journey, you’ll not only understand the ins and outs of Kubernetes but also have the skills to implement it confidently. Get ready for a hands-on experience that will revolutionize the way you navigate the digital landscape!

Let’s make Kubernetes happen!

Step 1: Building a simple application

We are using a simple Express application for this demonstration. It has a single endpoint (“/”) that responds with “Hello, Kubernetes!” when accessed. This is a minimal microservice example that we’ll use to demonstrate the deployment process.

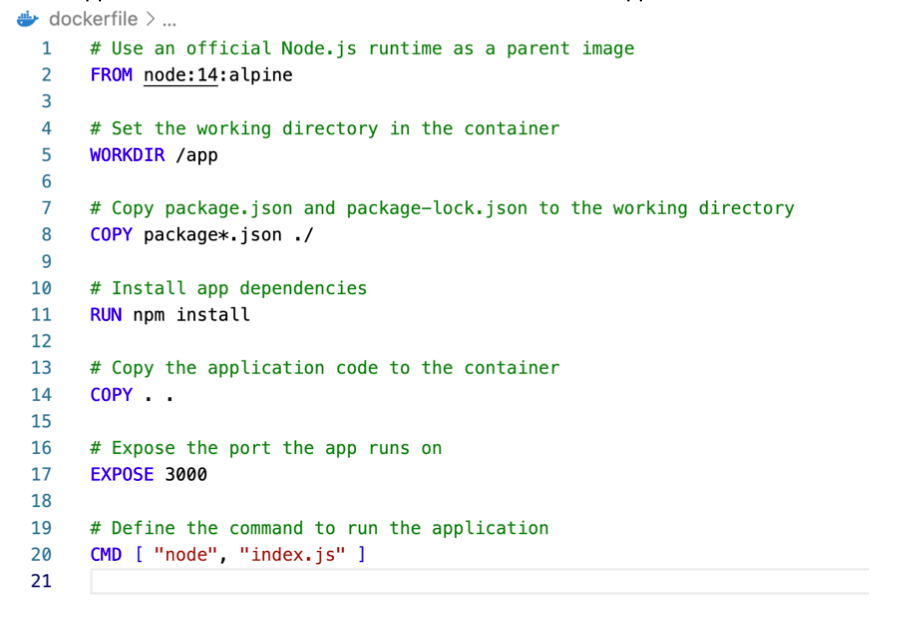

Step 2: Dockerizing our App

Dockerizing the application involves creating a Docker image to encapsulate the Node.js application along with its dependencies. The Dockerfile specifies the base image, sets up the working directory, installs dependencies, copies the application code, and defines the command to start the application.

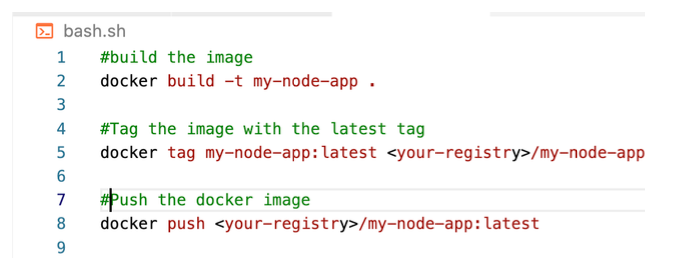

Step 2.1: Pushing your App to DockerHub

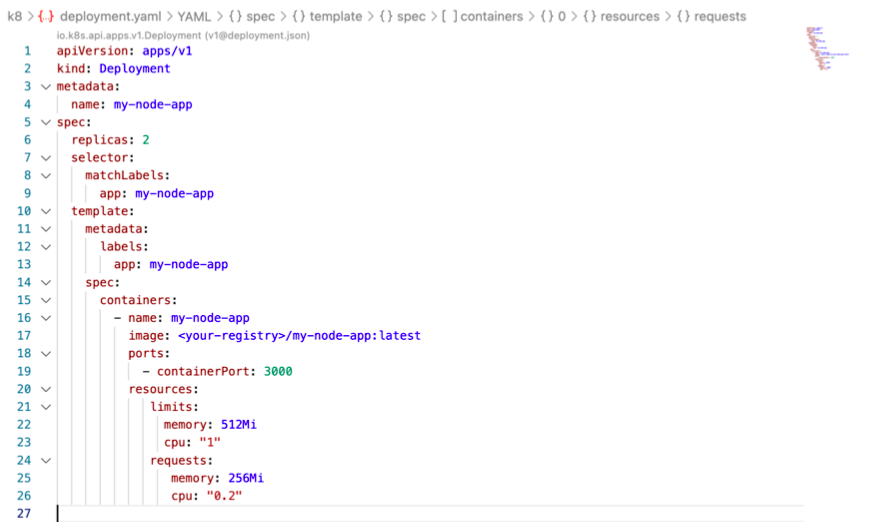

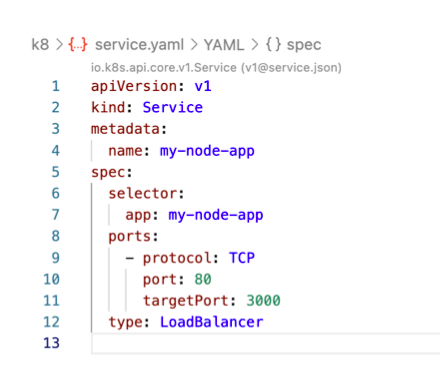

Step 3: Building Kubernetes Manifest Files

The deployment manifest describes how many instances (replicas) of the application should run. The service manifest defines how external traffic should reach the deployed instances. In this example, we expose port 80 to external traffic, which gets directed to the application’s port 3000.

service.yaml:

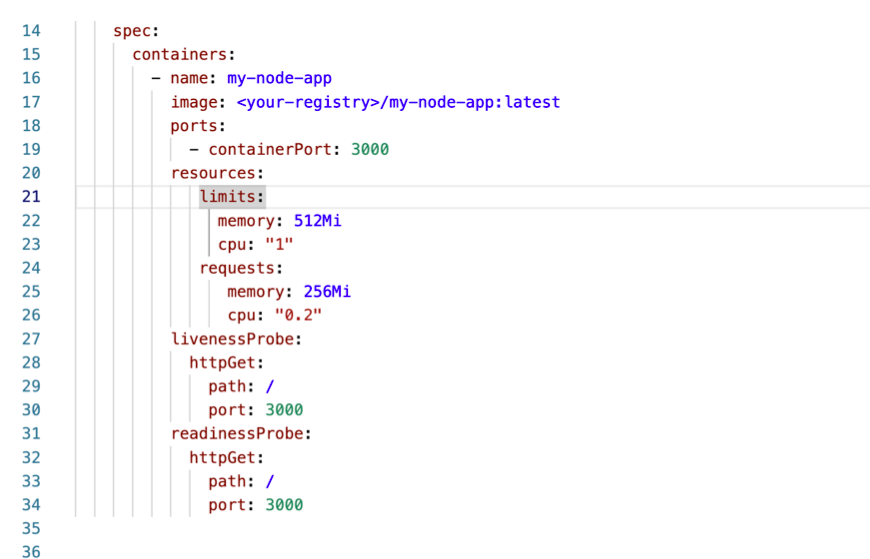
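
For readers who cannot view the image, a Service matching the description in this step could look roughly like the sketch below. The Service name, the app: hello-kubernetes label, and the LoadBalancer type are assumptions; only the port mapping (80 to 3000) comes from the text.

```yaml
# A sketch of a Service exposing the app (name, label, and type are assumptions)
apiVersion: v1
kind: Service
metadata:
  name: hello-kubernetes-service
spec:
  type: LoadBalancer          # asks the cloud provider for an external load balancer
  selector:
    app: hello-kubernetes     # must match the labels on the Deployment's Pods
  ports:
    - protocol: TCP
      port: 80                # port exposed to external traffic
      targetPort: 3000        # port the Express app listens on inside the container
```

The selector is what ties the Service to the application: traffic arriving on port 80 is forwarded to port 3000 on any ready Pod carrying the matching label.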

Step 4: Configuring Health Checks and Probes

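Kubernetes health checks (livenessProbe and readinessProbe) verify that the application is running and ready to receive traffic. If the liveness probe fails, Kubernetes restarts the container; if the readiness probe fails, the Pod is temporarily removed from the Service so it stops receiving requests. In this example, both probes make HTTP requests to the root (“/”) path on port 3000 to determine the application’s health.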

deployment.yaml:

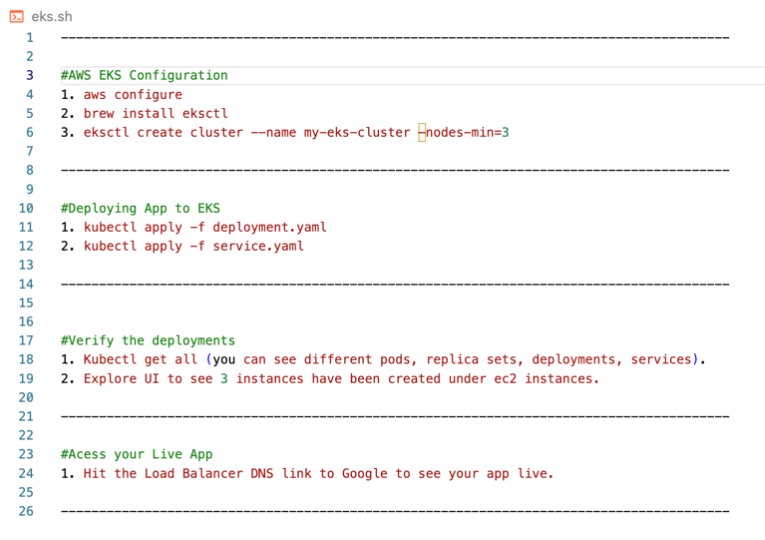
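
As a text version of the idea, a Deployment with both probes could look roughly like this. The probe path and port come from the description above; the resource names, the replica count, the image reference, and the timing values are placeholders and assumptions.

```yaml
# A sketch of a Deployment with health checks (names, image, and timings are assumptions)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-kubernetes
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-kubernetes
  template:
    metadata:
      labels:
        app: hello-kubernetes
    spec:
      containers:
        - name: hello-kubernetes
          image: your-dockerhub-user/hello-kubernetes:latest   # placeholder image reference
          ports:
            - containerPort: 3000
          livenessProbe:            # a failing liveness probe restarts the container
            httpGet:
              path: /
              port: 3000
            initialDelaySeconds: 10
            periodSeconds: 10
          readinessProbe:           # a failing readiness probe stops traffic to this Pod
            httpGet:
              path: /
              port: 3000
            initialDelaySeconds: 5
            periodSeconds: 5
```

Pointing both probes at the same endpoint is the simplest setup. Larger applications often use separate endpoints, so that a slow dependency can mark a Pod as not ready without causing a restart.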

Step 5: Deploying to Production Grade Cluster on AWS

This process involves configuring AWS, creating an EKS cluster named “my-eks-cluster” with a minimum of 3 nodes, and deploying the application with the Kubernetes manifests from the previous steps. The deployment is verified with “kubectl get all”, and the AWS console confirms that the EC2 instances were created. You can then access the live app via the Load Balancer DNS name provided for the Service, which completes the deployment to a production-grade AWS EKS cluster.
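
The post does not say which tool creates the cluster. One common option is eksctl, which can read the cluster definition from a config file. The sketch below assumes eksctl is used; the region, node group name, instance type, and maximum size are hypothetical, while the cluster name and the minimum of 3 nodes come from the text.

```yaml
# cluster.yaml: an eksctl cluster definition (region, node group, and sizes are assumptions)
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: my-eks-cluster
  region: us-east-1           # hypothetical region
managedNodeGroups:
  - name: workers             # hypothetical node group name
    instanceType: t3.medium   # hypothetical instance type
    minSize: 3                # minimum of 3 nodes, as described above
    maxSize: 5
    desiredCapacity: 3
```

Under these assumptions, the cluster would be created with eksctl create cluster -f cluster.yaml, the application deployed with kubectl apply -f deployment.yaml -f service.yaml, and the result checked with kubectl get all.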

Congratulations, you just did it 🙂

A Journey’s End

We have now completed our exploration of Kubernetes, having looked at the “why” behind its adoption by the technology giants, the “what” of Kubernetes, and the “how” of putting it into practice in a live cluster. We’ve dispelled the mystery surrounding Kubernetes’ orchestration capabilities, which enable services and apps to play beautifully in the digital orchestra.

So, whether you’re a seasoned developer, an IT enthusiast, or someone just dipping their toes into the tech waters, understanding Kubernetes can open doors to a world of possibilities. It’s a tool that empowers businesses to innovate faster, scale effortlessly, and navigate the complexities of modern IT landscapes.

Keep in mind that this is just the beginning of your journey into the world of Kubernetes. Because the tech industry is always changing, it’s important to remain inquisitive and flexible. Continue learning and exploring as you embrace Kubernetes’ transformative capacity to shape the future of digital infrastructure.

May your containers always run smoothly, and may your applications dance harmoniously in the orchestration symphony 🙂

Happy exploring!